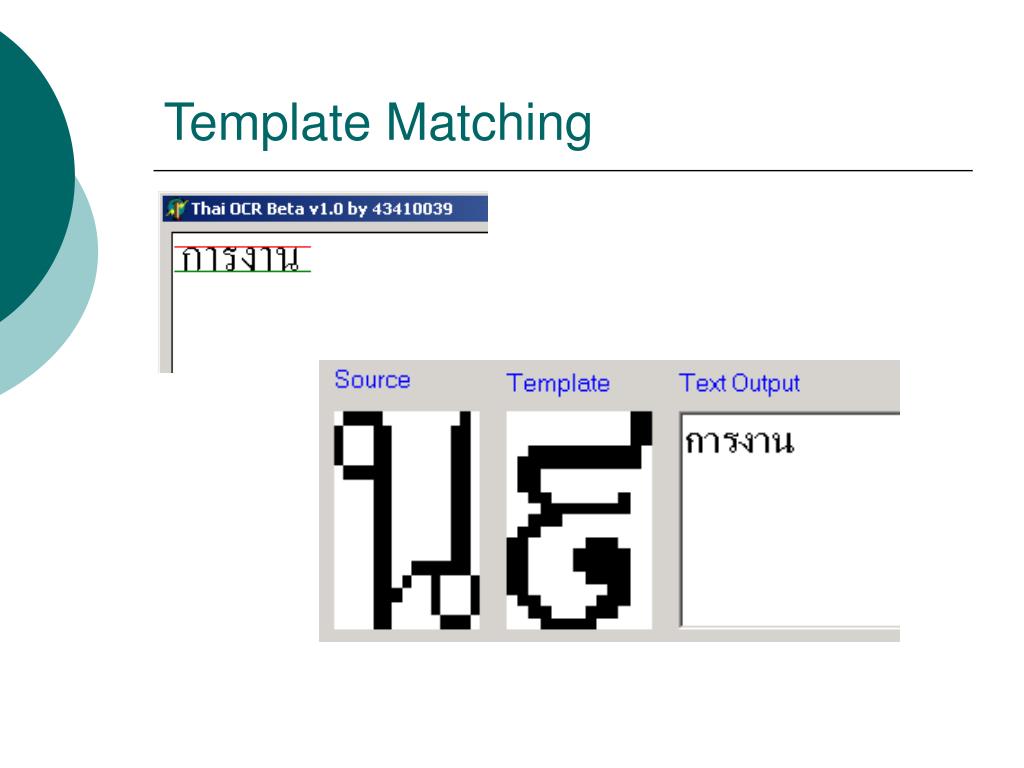

Template matching algorithm for OCR

Optical Character Recognition based on Template Matching. Global Journal of Computer Science and Technology. An approach for reducing morphological operator dataset and recognize Optical Character based on significant features. A novel approach for automatic number plate recognition.

In this paper, an innovative method is proposed for number plate recognition. It applies a series of image manipulations and algorithms to recognize number plates. For plate …

So, we're looking for the following image:

Figure: The template to search for.

That's the "template" we're looking for in the original image.

Matching

Template matching works by "sliding" the template across the original image. At each position, it does the matching by calculating a number.

How OpenCV does template matching

When you perform template matching in OpenCV, you get an image that shows the degree of "equality" or correlation between the template and the portion under the template. The template is compared against its background, and the result of the calculation (a number) is stored at the top-left pixel.

Here's what the actual "correlation map" looks like:

Figure: The result of template matching.

The greater the intensity, the greater the correlation between the template and the portion.
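To make the sliding idea concrete, here is a minimal pure-Python sketch. It is not OpenCV's implementation: the score (a count of equal pixels) is a stand-in chosen for readability, and the names `match_map` and `best_match` are hypothetical. It only shows how one number per template position ends up in a result map indexed by the template's top-left corner.

```python
def match_map(image, template):
    """Slide `template` over `image` and return a map of match scores.

    The score is the number of equal pixels, an assumption made for
    clarity; real libraries use correlation-style measures instead.
    """
    img_h, img_w = len(image), len(image[0])
    tpl_h, tpl_w = len(template), len(template[0])
    result = []
    for y in range(img_h - tpl_h + 1):
        row = []
        for x in range(img_w - tpl_w + 1):
            score = sum(
                1
                for dy in range(tpl_h)
                for dx in range(tpl_w)
                if image[y + dy][x + dx] == template[dy][dx]
            )
            # The score is stored at the template's top-left position.
            row.append(score)
        result.append(row)
    return result


def best_match(result):
    """Return the (x, y) position with the highest score in the map."""
    positions = [
        (x, y) for y in range(len(result)) for x in range(len(result[0]))
    ]
    return max(positions, key=lambda p: result[p[1]][p[0]])


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
template = [
    [9, 8],
    [7, 6],
]
scores = match_map(image, template)
print(best_match(scores))  # (2, 1): top-left corner of the best match
```

Note that the result map is smaller than the image: it has one entry per valid top-left position, so a 5x4 image and a 2x2 template give a 4x3 map.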

On with the code

Enough of theory, now we begin with the code. First, add these header files to the code: include "cv.

Utkarsh Sinha created AI Shack and has since been working on computer vision and related fields. He is currently at Microsoft working on computer vision.

By alternately adding and subtracting the distance values along the appropriate axis about the center point, a region in the shape of a cube is formed. A possible alternative would be to define a point and a radius, and subsequently describe a sphere as the region. The origins of the regions are defined to correspond to the locations of the sensors when gestures are executed.

Filtering is one of the processes in image processing performed before further steps are taken. In general, filtering removes substances from a mixture of elements, thus leaving useful material behind. There are many types of image filtering, such as minimum filtering, maximum filtering, median filtering, average filtering and others. Each of them has its own algorithm.

For this system, the recognition process is done step by step, and the user has to click the buttons provided in the system. The character recognition process starts with entering the image that the user wants to test, which is displayed in the box provided in the system.

N² means the total number of pixels in each window, which is called W. The maximum and minimum filters are two order filters that can be used in filtering the image. The maximum filter selects the largest value within an ordered window of pixel values, whereas the minimum filter selects the smallest value.

Thresholding is another technique applied to the image. There are many techniques for thresholding an image, such as the minimum threshold and the maximum threshold. A threshold is set, against which each pixel is compared. If the pixel is greater than or equal to the threshold, it is output as a 1. Otherwise it is output as a 0. Thresholding converts each pixel into black, white or …

This algorithm is called the average threshold technique. The average threshold technique is based on the average value of the image. Every point is a pixel value of the image. The values of all points are added, and the sum is divided by the number of points counted for each image.
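The maximum and minimum filters and the average threshold technique mentioned above can be sketched in a few lines of pure Python. This is only an illustration under stated assumptions: the window is N x N, windows are clipped at the image border, and the function names `order_filter` and `average_threshold` are hypothetical, not taken from the system being described.

```python
def order_filter(image, size, pick):
    """Apply `pick` (the built-in `min` or `max`) over each size x size window.

    Windows are clipped at the image border, which is an assumption;
    other border policies (padding, mirroring) are equally possible.
    """
    height, width = len(image), len(image[0])
    radius = size // 2
    output = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                image[yy][xx]
                for yy in range(max(0, y - radius), min(height, y + radius + 1))
                for xx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(pick(window))
        output.append(row)
    return output


def average_threshold(image):
    """Binarize using the mean pixel value as the threshold.

    A pixel greater than or equal to the mean becomes 1, otherwise 0.
    """
    pixels = [p for row in image for p in row]
    threshold = sum(pixels) / len(pixels)
    return [[1 if p >= threshold else 0 for p in row] for row in image]


image = [
    [10, 10, 10],
    [10, 200, 10],
    [10, 10, 250],
]
brightest = order_filter(image, 3, max)  # maximum filter
darkest = order_filter(image, 3, min)    # minimum filter
binary = average_threshold(image)
for row in binary:
    print(row)
```

In this tiny example the mean is about 57.8, so only the two bright pixels survive thresholding.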

The fundamental method of calculating the image correlation is the so-called cross-correlation, which is essentially a simple sum of pairwise multiplications of corresponding pixel values of the images. Though the correlation value does seem to reflect the similarity of the images being compared, the cross-correlation method is far from robust.
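As a small illustration of the sum of pairwise multiplications, the sketch below computes the cross-correlation of two equally sized grayscale images stored as nested lists. The function name and the sample images are hypothetical. The example also hints at why the measure is fragile: a uniformly bright image with no structure at all scores higher than an exact copy of the template.

```python
def cross_correlation(a, b):
    """Sum of products of corresponding pixels of two same-size images."""
    return sum(
        pixel_a * pixel_b
        for row_a, row_b in zip(a, b)
        for pixel_a, pixel_b in zip(row_a, row_b)
    )


template = [[1, 9], [9, 1]]
identical = [[1, 9], [9, 1]]
flat_bright = [[20, 20], [20, 20]]  # no resemblance to the template

score_identical = cross_correlation(template, identical)
score_flat = cross_correlation(template, flat_bright)
print(score_identical)  # 164
print(score_flat)       # 400
```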

Its main drawback is that it is biased by changes in the global brightness of the images: brightening an image may skyrocket its cross-correlation with another image, even if the second image is not at all similar. Normalized cross-correlation (NCC) is an enhanced version of the classic cross-correlation method that introduces two improvements over the original one: the results are invariant to global brightness changes, and the final correlation value is scaled to the [-1, 1] range.

Let us get back to the problem at hand. Having introduced normalized cross-correlation, a robust measure of image similarity, we are now able to determine how well the template fits in each of the possible positions.
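One common way to write normalized cross-correlation is the zero-mean form sketched below: each image has its mean subtracted, and the sum of products is divided by the product of the two norms. This is an assumption about the exact variant; the text does not spell out the formula. The function name `ncc` and the choice to return 0.0 for a perfectly flat image (where the measure is undefined) are also ours.

```python
from math import sqrt


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two same-size images."""
    xs = [p for row in a for p in row]
    ys = [p for row in b for p in row]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    numerator = sum(p * q for p, q in zip(dx, dy))
    denominator = sqrt(sum(p * p for p in dx) * sum(q * q for q in dy))
    # A flat image has zero variance; returning 0.0 is a convention here.
    return numerator / denominator if denominator else 0.0


template = [[1, 9], [9, 1]]
brighter = [[51, 59], [59, 51]]  # the template with 50 added to every pixel
negative = [[9, 1], [1, 9]]      # the template with light and dark swapped
flat = [[20, 20], [20, 20]]

print(ncc(template, brighter))  # 1.0: brightening does not change the score
print(ncc(template, negative))  # -1.0: the inverted image is anti-correlated
print(ncc(template, flat))      # 0.0 by the convention above
```

Compared with plain cross-correlation, the flat bright image no longer wins, and a uniformly brightened copy of the template still scores as a perfect match.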

We may represent the results in the form of an image, where the brightness of each pixel represents the NCC value of the template positioned over this pixel (black representing the minimal correlation). All that needs to be done at this point is to decide which points of the template correlation image are good enough to be considered actual matches.

Usually we identify as matches the positions whose template correlation is simultaneously stronger than some predefined threshold value and locally maximal (stronger than the template correlation at the neighboring pixels).

It is quite easy to express the described method in Adaptive Vision Studio: we need just two built-in filters. We compute the template correlation image using the ImageCorrelationImage filter, and then identify the matches using ImageLocalMaxima. We just need to set the inMinValue parameter, which cuts off the weak local maxima from the results, as discussed in the previous section.
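The match-selection rule (a local maximum that also exceeds a minimum value) can be sketched in plain Python as follows. This is not the Adaptive Vision Studio implementation of ImageLocalMaxima; `find_matches` and its `min_value` parameter are hypothetical names that merely play a role similar to inMinValue, and the 8-pixel neighborhood is an assumption.

```python
def find_matches(correlation, min_value):
    """Return (x, y, value) for strict local maxima that reach `min_value`.

    A position counts as a local maximum when its value is greater than
    the values of all of its (up to eight) neighbors.
    """
    height, width = len(correlation), len(correlation[0])
    matches = []
    for y in range(height):
        for x in range(width):
            value = correlation[y][x]
            if value < min_value:
                continue  # too weak to be a match
            neighbors = [
                correlation[yy][xx]
                for yy in range(max(0, y - 1), min(height, y + 2))
                for xx in range(max(0, x - 1), min(width, x + 2))
                if (yy, xx) != (y, x)
            ]
            if all(value > n for n in neighbors):
                matches.append((x, y, value))
    return matches


# A hand-written correlation image, used only for illustration.
correlation = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.1],
    [0.1, 0.3, 0.2, 0.6],
    [0.0, 0.1, 0.4, 0.3],
]
print(find_matches(correlation, 0.5))  # [(1, 1, 0.9), (3, 2, 0.6)]
print(find_matches(correlation, 0.7))  # [(1, 1, 0.9)]
```

Raising the minimum value trades recall for precision: the weaker peak at (3, 2) is reported at 0.5 but dropped at 0.7.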

Though the introduced technique was sufficient to solve the problem being considered, it has important drawbacks: it is computationally expensive, and template occurrences must preserve the orientation of the reference template. In the next sections we will discuss how these issues are addressed in advanced template matching techniques: Grayscale-based Matching and Edge-based Matching.

Grayscale-based Matching is an advanced Template Matching algorithm that extends the original idea of correlation-based template detection, enhancing its efficiency and allowing template occurrences to be found regardless of their orientation. Edge-based Matching enhances this method even more by limiting the computation to the object edge areas. In this section we will describe the intrinsic details of both algorithms.

In the next section (Filter toolset) we will explain how to use these techniques in Adaptive Vision Studio.

An Image Pyramid is a series of images, each image being the result of downsampling (scaling down, by a factor of two in this case) the previous element. Image pyramids can be applied to enhance the efficiency of correlation-based template detection. The important observation is that the template depicted in the reference image usually is still discernible after significant downsampling of the image (though, naturally, fine details are lost in the process).
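A pyramid of this kind can be built with a few lines of pure Python. The sketch below assumes that downsampling is done by averaging each 2x2 block of pixels, which is one common choice; the text only states the factor of two. The names `downsample` and `build_pyramid` are hypothetical.

```python
def downsample(image):
    """Halve both dimensions by averaging each 2x2 block of pixels.

    A trailing odd row or column is dropped. Averaging is an assumption;
    other downsampling schemes would work as well.
    """
    height, width = len(image) // 2, len(image[0]) // 2
    return [
        [
            (
                image[2 * y][2 * x]
                + image[2 * y][2 * x + 1]
                + image[2 * y + 1][2 * x]
                + image[2 * y + 1][2 * x + 1]
            )
            / 4
            for x in range(width)
        ]
        for y in range(height)
    ]


def build_pyramid(image, levels):
    """Return [image, image/2, image/4, ...] with `levels` entries.

    The caller is expected to pick `levels` small enough that every
    level is still at least 2x2 pixels before it is downsampled.
    """
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


image = [[float(x + y) for x in range(8)] for y in range(8)]
pyramid = build_pyramid(image, 3)
sizes = [(len(level[0]), len(level)) for level in pyramid]
print(sizes)  # [(8, 8), (4, 4), (2, 2)]
```

Each level holds a quarter of the pixels of the one below it, which is where the speed-up of searching on the top level comes from.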

Therefore we can identify match candidates in the downsampled (and therefore much faster to process) image on the highest level of our pyramid, and then repeat the search on the lower levels of the pyramid, each time considering only the template positions that scored high on the previous level.

At each level of the pyramid we will need an appropriately downsampled picture of the reference template.
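The coarse-to-fine search can be sketched with a two-level pyramid. To keep the example short it scores positions with the sum of absolute differences (lower is better) rather than NCC, which is a deliberate simplification, and it downsamples by averaging 2x2 blocks. All function names and the `keep` parameter are hypothetical.

```python
def downsample(img):
    """Halve both dimensions by averaging 2x2 blocks (an assumption)."""
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4
            for x in range(len(img[0]) // 2)
        ]
        for y in range(len(img) // 2)
    ]


def sad(image, template, x, y):
    """Sum of absolute differences with the template's top-left at (x, y)."""
    return sum(
        abs(image[y + dy][x + dx] - template[dy][dx])
        for dy in range(len(template))
        for dx in range(len(template[0]))
    )


def positions(image, template):
    """All valid top-left positions of the template inside the image."""
    return [
        (x, y)
        for y in range(len(image) - len(template) + 1)
        for x in range(len(image[0]) - len(template[0]) + 1)
    ]


def coarse_to_fine(image, template, keep=2):
    """Search the half-size level fully, then refine the best candidates."""
    small_img, small_tpl = downsample(image), downsample(template)
    # Exhaustive search on the coarse level; keep the best few positions.
    coarse = sorted(
        positions(small_img, small_tpl),
        key=lambda p: sad(small_img, small_tpl, p[0], p[1]),
    )[:keep]
    # A coarse position (x, y) corresponds to roughly (2x, 2y) one level
    # down, so only a 3x3 neighborhood around it is examined there.
    valid = set(positions(image, template))
    candidates = sorted(
        {
            (2 * x + dx, 2 * y + dy)
            for x, y in coarse
            for dx in (-1, 0, 1)
            for dy in (-1, 0, 1)
        }
        & valid
    )
    return min(candidates, key=lambda p: sad(image, template, p[0], p[1]))


template = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]
image = [[0] * 8 for _ in range(8)]
for dy, row in enumerate(template):
    for dx, value in enumerate(row):
        image[2 + dy][3 + dx] = value  # paste the template at x=3, y=2

found = coarse_to_fine(image, template)
print(found)  # (3, 2)
```

Here the full-resolution level evaluates at most 18 positions instead of all 25, and the saving grows quickly with image size and with the number of pyramid levels.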
